Most pharmaceutical companies hold large historical clinical databases, yet much of this data may never be used beyond its original purpose: showing that the drug in question is safe and efficacious. Reused well, it can better inform future decisions in clinical trials. This article starts from internal Phase I placebo analyses and then broadens to modern approaches for using historical and external controls, including dynamic borrowing across phases.

A project was undertaken to develop a tool investigating subjects who received only placebo in 82 Phase I single and multiple ascending dose studies conducted between 2005 and 2012. We retain this internal case study to illustrate placebo behaviour and covariate effects; subsequent sections generalise to today’s broader data sources and methods. The project, undertaken entirely in R, aimed to automate the production of a set of documents summarising observed data for clinical and non-data-facing staff. The outputs covered clinical laboratory measurements, vital signs, electrocardiograms and adverse events.

A large-scale meta-analysis of placebo subjects makes it possible to explore factors linked with adverse event occurrence, or with changes in safety parameters, without the confounding effect of study drugs. This information can shape future study designs by identifying appropriate covariates and underlying causes. It can also be used to build a Bayesian prior for interpreting drug effects in small Phase I studies, which can reduce overall costs and improve the certainty of decisions made during early drug development. In addition, sponsors increasingly use historical or external controls in carefully selected settings (e.g. rare or paediatric indications, life-threatening diseases, or very large effects). Success depends on the comparability of the historical and current trials, and on choosing methods that attenuate borrowing when the two sources conflict.

Most worked examples below are simulated for illustration. We also point to published use-cases where borrowing from historical data adaptively reduces weight when conflict is detected.

Reference Ranges

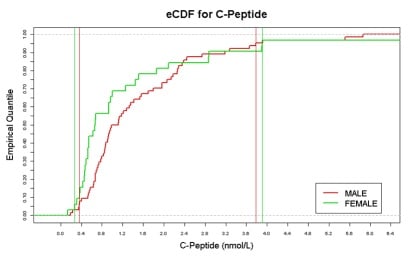

For tests where reference ranges are not available, placebo data provide information on the expected ranges and allow adjustment for covariates, population structure and study type. An example is shown in Figure 1.

Figure 1: Example Empirical Cumulative Distribution Function (eCDF)
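Once placebo values for a test are pooled, the eCDF and an empirical reference range are simple to compute. The sketch below is illustrative only. It assumes records with a numeric `value` and a covariate such as `sex` (hypothetical field names, simulated values). It takes the 2.5th and 97.5th percentiles as the range, and it adjusts for a covariate by stratifying. Regression-based adjustment would be an alternative.

```python
import random
import statistics
from bisect import bisect_right
from collections import defaultdict

def ecdf(values):
    """Return F(x): the proportion of observed values less than or equal to x."""
    xs = sorted(values)
    n = len(xs)
    return lambda x: bisect_right(xs, x) / n

def reference_range(values):
    """Central 95% empirical range (2.5th and 97.5th percentiles)."""
    # n=40 gives cut points at 2.5% steps, so the first and last are the limits we want.
    cuts = statistics.quantiles(values, n=40, method="inclusive")
    return cuts[0], cuts[-1]

def stratified_ranges(records, covariate):
    """Reference range computed separately within each level of one covariate."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[covariate]].append(rec["value"])
    return {level: reference_range(vals) for level, vals in groups.items()}

# Hypothetical simulated placebo records (not real study data): one analyte, by sex.
rng = random.Random(2024)
records = [{"sex": sex, "value": rng.gauss(100 if sex == "M" else 90, 10)}
           for sex in ("M", "F") for _ in range(500)]

values = [r["value"] for r in records]
F = ecdf(values)
low, high = reference_range(values)
ranges = stratified_ranges(records, "sex")
print(f"Pooled 95% range: {low:.1f} to {high:.1f}; eCDF at upper limit = {F(high):.3f}")
for level, (lo, hi) in ranges.items():
    print(f"  sex={level}: {lo:.1f} to {hi:.1f}")
```

Stratification is transparent, but each stratum needs enough subjects for stable tail percentiles. This is why pooling across many placebo arms helps.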

Today, reference distributions can be sourced from completed trial control arms, from real-world data such as electronic health records and medical charts, and from registries and natural-history studies, especially in rare diseases. Before pooling, align endpoints, assess heterogeneity and mitigate bias through protocolised extraction and pre-specified analysis plans, while planning for time trends and stage migration. When combining data across studies, align to CDISC SDTM/ADaM wherever possible, as industry collaborations on clinical data standards have accelerated cross-study interoperability.

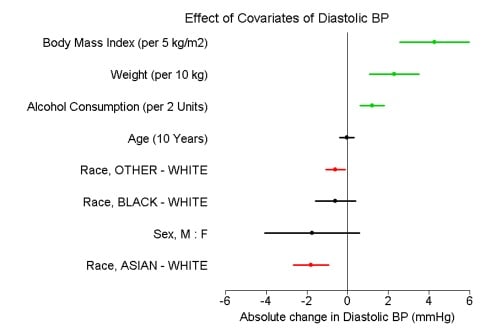

Covariate Analysis

Early-stage clinical trial populations differ from the general population. Figure 2 shows the estimated effects of different covariates, each fitted as a separate single-factor model to the historical Phase I population.

Figure 2: Summary of six single-factor covariate models for vital signs, with 90% CIs.
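Each estimate in a summary like Figure 2 comes from regressing the vital sign on one covariate at a time. The sketch below fits such single-factor least-squares models to simulated placebo data; the covariate names and effect sizes are hypothetical. It uses a normal-approximation 90% interval, which assumes a reasonably large sample. With small samples, a t quantile would be more appropriate.

```python
import math
import random
from statistics import NormalDist, fmean

def single_factor_fit(x, y, level=0.90):
    """Least-squares slope of y on a single covariate x, with a normal-approximation CI."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)          # standard error of the slope
    z = NormalDist().inv_cdf(0.5 + level / 2)    # about 1.645 for a 90% interval
    return slope, (slope - z * se, slope + z * se)

# Hypothetical simulated placebo data: systolic BP (mmHg) driven by age, BMI and sex.
rng = random.Random(7)
n = 800
age = [rng.uniform(18, 55) for _ in range(n)]
bmi = [rng.uniform(18, 32) for _ in range(n)]
male = [1.0 if rng.random() < 0.7 else 0.0 for _ in range(n)]
sbp = [100 + 0.3 * a + 0.8 * b + 5 * m + rng.gauss(0, 8) for a, b, m in zip(age, bmi, male)]

covariates = {"age (per year)": age, "BMI (per kg/m2)": bmi, "male vs female": male}
results = {name: single_factor_fit(x, sbp) for name, x in covariates.items()}
for name, (est, (lo, hi)) in results.items():
    print(f"{name:>16}: {est:6.2f} mmHg (90% CI {lo:6.2f} to {hi:6.2f})")
```

Single-factor models are easy to communicate. However, they do not adjust one covariate for another, so correlated covariates can share or exaggerate effects.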

When borrowing across studies, add simple comparability diagnostics: baseline balance (e.g. standardised differences), overlap/propensity diagnostics, and pre-specified sensitivity analyses.
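Standardised differences put baseline imbalance on a unit-free scale, so continuous and binary characteristics can be compared. The sketch below uses the usual pooled-SD formulas. The data are hypothetical, and the |d| > 0.1 flag is a common rule of thumb rather than a fixed regulatory threshold.

```python
import math
from statistics import fmean, variance

def smd_continuous(a, b):
    """Standardised mean difference using the average of the two sample variances."""
    return (fmean(a) - fmean(b)) / math.sqrt((variance(a) + variance(b)) / 2)

def smd_binary(p_a, p_b):
    """Standardised difference for a binary baseline characteristic (proportions)."""
    return (p_a - p_b) / math.sqrt((p_a * (1 - p_a) + p_b * (1 - p_b)) / 2)

# Hypothetical baseline data: current trial vs pooled historical placebo subjects.
current_age = [30, 32, 34, 36, 38]
historical_age = [28, 30, 32, 34, 36]
diagnostics = {
    "age": smd_continuous(current_age, historical_age),
    "male": smd_binary(0.60, 0.50),
}
for name, d in diagnostics.items():
    # |d| > 0.1 is a commonly used rule-of-thumb flag for meaningful imbalance.
    print(f"{name}: standardised difference = {d:.3f}, flag = {abs(d) > 0.1}")
```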

As a quick practical comparability check, confirm alignment on population and inclusion criteria, endpoint definitions and standard of care, and examine time trends, stage migration and the geography/site mix.

Problems to Overcome

Pooling placebo data from 82 studies run over several years raised a number of practical problems.

Standardising laboratory units remained a challenge: more than 180 tests were performed across countries using different assays and units, and there was no single conclusive source for SI units. In 2025, this is mitigated by prioritising CDISC/CFAST therapeutic-area standards and shared codelists, which reduce harmonisation effort.
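A harmonisation step is essentially a controlled lookup from test and reported unit to an SI unit and factor. The sketch below uses a small hypothetical table and illustrative test codes. The factors shown are the usual conventional-to-SI factors for these analytes and should be checked against each laboratory's specification. Rather than guessing, unmapped test/unit pairs are routed for manual review.

```python
# Hypothetical lookup: (test code, reported unit) -> (SI unit, multiplicative factor).
# Factors are the usual conventional-to-SI factors; confirm each against the lab's specification.
TO_SI = {
    ("GLUC", "mg/dL"): ("mmol/L", 1 / 18.016),
    ("GLUC", "mmol/L"): ("mmol/L", 1.0),
    ("CREAT", "mg/dL"): ("umol/L", 88.4),
    ("CREAT", "umol/L"): ("umol/L", 1.0),
    ("HGB", "g/dL"): ("g/L", 10.0),
    ("HGB", "g/L"): ("g/L", 1.0),
}

def to_si(test, value, unit):
    """Convert one result to its SI unit, refusing to guess when no mapping exists."""
    try:
        si_unit, factor = TO_SI[(test, unit)]
    except KeyError:
        raise ValueError(f"No SI conversion defined for {test} in {unit}") from None
    return value * factor, si_unit

def harmonise(rows):
    """Split (test, value, unit) rows into converted results and unmapped pairs for review."""
    converted, unmapped = [], []
    for test, value, unit in rows:
        try:
            si_value, si_unit = to_si(test, value, unit)
            converted.append((test, round(si_value, 3), si_unit))
        except ValueError:
            unmapped.append((test, unit))
    return converted, unmapped

rows = [("GLUC", 90, "mg/dL"), ("GLUC", 5.1, "mmol/L"), ("HGB", 13.5, "g/dL"), ("ALT", 30, "U/L")]
converted, unmapped = harmonise(rows)
print(converted)
print("Needs review:", unmapped)
```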

Classifying studies required study-type covariates to distinguish single- from multiple-dose designs and to tag special populations. This task is now streamlined by shared control-arm metadata from cross-company historical-trial data-sharing initiatives.

Identifying unique subjects was complicated by participants enrolling in multiple studies; applying cross-study de-duplication rules and documenting privacy-preserving linkage assumptions now mitigates this risk.
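One common privacy-preserving pattern replaces raw identifiers with a keyed hash of normalised fields, then looks for tokens that occur in more than one study. The sketch below assumes hypothetical linkage fields (date of birth, sex and initials) and a secret key held by a data custodian. It catches only exact matches after normalisation. Fuzzy matching and the actual linkage rules would need to be defined and documented separately.

```python
import hashlib
import hmac
from collections import defaultdict

# Hypothetical secret held by a data custodian, never stored with the analysis data.
LINKAGE_KEY = b"replace-with-a-managed-secret"

def linkage_token(dob, sex, initials):
    """Keyed hash (HMAC-SHA256) of normalised identifiers, so raw identifiers need not be shared."""
    fields = [dob.strip(), sex.strip().upper(), initials.strip().upper()]
    return hmac.new(LINKAGE_KEY, "|".join(fields).encode("utf-8"), hashlib.sha256).hexdigest()

def repeat_participants(subjects):
    """Map each linkage token seen in more than one study to the sorted list of those studies."""
    studies = defaultdict(set)
    for s in subjects:
        studies[linkage_token(s["dob"], s["sex"], s["initials"])].add(s["study"])
    return {token: sorted(ids) for token, ids in studies.items() if len(ids) > 1}

subjects = [  # hypothetical records
    {"study": "S001", "dob": "1985-03-12", "sex": "M", "initials": "AB"},
    {"study": "S001", "dob": "1990-07-01", "sex": "F", "initials": "CD"},
    {"study": "S004", "dob": "1985-03-12", "sex": "m", "initials": "ab "},
]
repeats = repeat_participants(subjects)
for token, ids in repeats.items():
    print(f"{token[:12]}... appears in {ids}")
```

A de-duplication rule might then keep, for example, only each participant's first placebo exposure. Whichever rule is chosen should be pre-specified.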

Regulatory note: regulatory confidence hinges on design rigour. Pre-specified assumptions, simulations examining operating characteristics and planned sensitivity analyses are expected where external controls supplement or replace a concurrent control, and early agency dialogue is recommended.

Methods for Borrowing from Historical Data in Clinical Trials

Power priors and their modified variants form a prior by raising the historical likelihood to a power a0 between 0 (no borrowing) and 1 (full pooling), which controls the influence of the historical data; they are straightforward to apply but can be sensitive to unmodelled heterogeneity.
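For a binary endpoint with a Beta initial prior, a fixed-weight power prior stays conjugate. The historical counts simply enter the Beta parameters multiplied by a0. The sketch below assumes a Beta(1, 1) initial prior and hypothetical AE counts. It shows the fixed-a0 version only. Modified power priors place a prior on a0 instead.

```python
def power_prior_posterior(y0, n0, y, n, a0, a=1.0, b=1.0):
    """Beta posterior for an event rate under a fixed-weight power prior.

    Historical data (y0 events in n0 subjects) enter with weight a0 in [0, 1];
    Beta(a, b) is the initial prior and (y, n) are the current-trial data.
    """
    if not 0.0 <= a0 <= 1.0:
        raise ValueError("a0 must lie in [0, 1]")
    alpha = a + a0 * y0 + y
    beta = b + a0 * (n0 - y0) + (n - y)
    return alpha, beta

# Hypothetical counts: 12/200 historical placebo subjects and 3/20 current subjects with the AE.
for a0 in (0.0, 0.5, 1.0):
    alpha, beta = power_prior_posterior(12, 200, 3, 20, a0)
    print(f"a0={a0:.1f}: Beta({alpha:.0f}, {beta:.0f}), posterior mean {alpha / (alpha + beta):.3f}, "
          f"borrowed historical subjects {a0 * 200:.0f}")
```

Moving a0 from 0 to 1 pulls the posterior mean from 0.182 (current data alone) to 0.072, close to the historical rate. This is exactly why a fixed a0 is risky when the current trial genuinely differs.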

Meta-analytic-predictive (MAP) priors explicitly model between-trial heterogeneity, and robust-MAP adds a weakly informative mixture component so that borrowing attenuates when prior-data conflict is detected.
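The robustification step can be seen with a mixture of Beta components. After the current data are observed, each component's weight is rescaled by how well it predicted those data. The sketch below assumes the MAP prior has already been approximated by a single Beta(6, 94) component; the numbers are hypothetical. Deriving that approximation from a hierarchical model is not shown. A 20% weight goes on a vague Beta(1, 1) component.

```python
import math

def log_beta(a, b):
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

def update_mixture(prior, y, n):
    """Conjugate update of a mixture-of-Betas prior after observing y events in n subjects.

    prior: list of (weight, a, b). Component weights are rescaled by each component's
    marginal likelihood, which is what lets the robust component take over under conflict.
    """
    log_w = [math.log(w) + log_beta(a + y, b + n - y) - log_beta(a, b) for w, a, b in prior]
    top = max(log_w)
    raw = [math.exp(lw - top) for lw in log_w]
    total = sum(raw)
    return [(r / total, a + y, b + n - y) for r, (_, a, b) in zip(raw, prior)]

def mixture_mean(mix):
    return sum(w * a / (a + b) for w, a, b in mix)

# Hypothetical MAP prior approximated by Beta(6, 94) (mean 6%), robustified with
# a 20% weight on a vague Beta(1, 1) component.
robust_map = [(0.8, 6.0, 94.0), (0.2, 1.0, 1.0)]

consistent = update_mixture(robust_map, y=1, n=20)
conflict = update_mixture(robust_map, y=8, n=20)
for label, post in (("consistent (1/20)", consistent), ("conflict (8/20)", conflict)):
    print(f"{label}: weight on MAP component {post[0][0]:.2f}, posterior mean {mixture_mean(post):.3f}")
```

When the current data agree with the MAP prior, its component keeps nearly all the weight. When they conflict, the weight shifts to the vague component and borrowing largely switches off.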

Commensurate priors centre current parameters on historical information via a commensurability variance, shrinking towards the historical estimate only when consistency is supported, and they extend naturally to generalised linear mixed models and time-to-event data. In practice, dynamic borrowing approaches that adapt to agreement or conflict are preferable to static pooling.
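A normal model with a discrete hyperprior on the commensurability precision (τ, the inverse of the commensurability variance) shows the adaptive shrinkage in closed form. The two-point hyperprior used here is a simplified stand-in for the spike-and-slab style hyperpriors often used in practice. The estimates and standard errors are hypothetical. Each τ value gives a conjugate normal posterior. The τ values are then reweighted by how well they predict the current estimate.

```python
import math
from statistics import NormalDist

def commensurate_posterior(y0, se0, y, se, taus, prior_probs):
    """Posterior for a current-trial mean theta under a normal commensurate prior.

    Historical estimate y0 (standard error se0) informs theta0; theta | theta0 ~ N(theta0, 1/tau).
    tau (the commensurability precision) gets a discrete hyperprior: large tau means
    "borrow strongly", small tau means "borrow little".
    Returns posterior probabilities for each tau and the posterior mean of theta.
    """
    parts = []
    for tau, p in zip(taus, prior_probs):
        v_prior = se0 ** 2 + 1.0 / tau                      # prior variance of theta given tau
        evidence = NormalDist(y0, math.sqrt(v_prior + se ** 2)).pdf(y)
        post_var = 1.0 / (1.0 / v_prior + 1.0 / se ** 2)
        post_mean = post_var * (y0 / v_prior + y / se ** 2)
        parts.append((p * evidence, post_mean))
    total = sum(w for w, _ in parts)
    weights = [w / total for w, _ in parts]
    mean = sum(w * m for w, (_, m) in zip(weights, parts))
    return weights, mean

# Hypothetical placebo mean change in a vital sign: historical 0.0 (SE 0.5); current SE 1.5.
taus, probs = [100.0, 0.01], [0.5, 0.5]
for y in (0.5, 6.0):
    weights, mean = commensurate_posterior(0.0, 0.5, y, 1.5, taus, probs)
    print(f"current estimate {y:4.1f}: P(high commensurability) = {weights[0]:.2f}, "
          f"posterior mean = {mean:.2f}")
```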

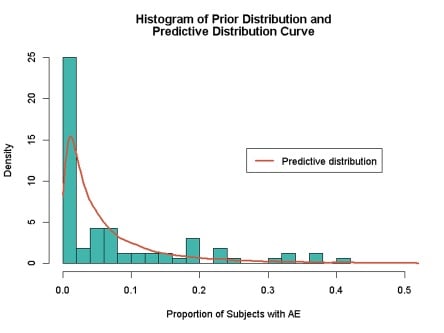

Using Data as a Bayesian Prior

Historical data can bring substantial benefits when used as prior information in a Bayesian analysis, reducing overall costs and improving the certainty of decisions made during drug development. Beyond simple informative priors, practical options include modified power priors, MAP/robust-MAP priors and commensurate priors, which account for heterogeneity and automatically down-weight conflicting historical evidence.

Adverse event data can be used to create predictive distributions for the expected AE rate in a new study. For the AE of interest in Figure 3, the 95% credible interval for the proportion of subjects in a new trial experiencing the AE is 0.2% to 24.8%.

Figure 3: Prior and predictive distribution for occurrence of AEs
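An interval of this kind can be approximated by fitting a Beta distribution to the study-level AE proportions and reading off its central 95%. The sketch below uses made-up counts, not the data behind Figure 3. Its method-of-moments fit ignores within-study binomial noise, which tends to widen the interval. A full hierarchical (beta-binomial) model would be the more rigorous choice.

```python
import random
import statistics

# Hypothetical per-study placebo counts (subjects with the AE, subjects dosed); illustrative only.
counts = [(2, 24), (0, 18), (5, 36), (1, 12), (3, 30), (0, 16), (4, 24), (2, 20)]

def fit_beta_moments(counts):
    """Crude method-of-moments Beta fit to the observed study-level proportions.

    This ignores within-study binomial noise, so it tends to overstate between-study
    spread (a conservative, wider predictive distribution).
    """
    props = [y / n for y, n in counts]
    m = statistics.fmean(props)
    v = statistics.variance(props)
    if not 0 < v < m * (1 - m):
        raise ValueError("moment fit undefined for these data")
    k = m * (1 - m) / v - 1
    return m * k, (1 - m) * k

def predictive_interval(alpha, beta, draws=50_000, seed=3):
    """95% interval for the AE proportion in a new trial, from Monte Carlo Beta draws."""
    rng = random.Random(seed)
    sample = [rng.betavariate(alpha, beta) for _ in range(draws)]
    cuts = statistics.quantiles(sample, n=40)
    return cuts[0], cuts[-1]

alpha, beta = fit_beta_moments(counts)
lo, hi = predictive_interval(alpha, beta)
print(f"Beta({alpha:.2f}, {beta:.2f}); mean {alpha / (alpha + beta):.3f}; 95% interval {lo:.3f} to {hi:.3f}")
```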

In simulation, assess Type I error, power and bias/MSE across plausible heterogeneity scenarios, and examine sensitivity to prior weighting and, for robust-MAP, the chosen mixture weight.
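A small Monte Carlo study makes these checks concrete. The sketch below assumes a hypothetical two-arm design with a normal outcome, known σ, flat initial priors and a fixed-weight power prior on the control arm. The historical control mean is treated as fixed. Success is declared when P(effect > 0 | data) > 0.975. Drift is the gap between the historical and true current control means. The grid reports Type I error, power, and the bias and MSE of the control estimate.

```python
import math
import random
from statistics import NormalDist

Z = NormalDist()

def simulate_oc(delta, drift, a0, n_t=40, n_c=20, n_h=100, sigma=1.0,
                threshold=0.975, n_sims=10_000, seed=1):
    """Monte Carlo operating characteristics for a two-arm trial whose control arm
    borrows n_h historical controls through a fixed-weight power prior.

    Normal outcome with known sigma and flat initial priors. delta is the true treatment
    effect; drift is the historical control mean minus the true current control mean.
    Returns (rate of declaring success, bias of control estimate, MSE of control estimate).
    """
    rng = random.Random(seed)
    mu_c = 0.0
    ybar_h = mu_c + drift            # historical control mean, treated as fixed and known
    w_h = a0 * n_h                   # number of historical subjects effectively borrowed
    successes, err_sum, sq_sum = 0, 0.0, 0.0
    for _ in range(n_sims):
        ybar_t = rng.gauss(mu_c + delta, sigma / math.sqrt(n_t))
        ybar_c = rng.gauss(mu_c, sigma / math.sqrt(n_c))
        mu_c_hat = (n_c * ybar_c + w_h * ybar_h) / (n_c + w_h)   # posterior mean of control
        post_sd = sigma * math.sqrt(1 / n_t + 1 / (n_c + w_h))    # posterior SD of the effect
        if Z.cdf((ybar_t - mu_c_hat) / post_sd) > threshold:      # P(effect > 0 | data)
            successes += 1
        err = mu_c_hat - mu_c
        err_sum += err
        sq_sum += err ** 2
    return successes / n_sims, err_sum / n_sims, sq_sum / n_sims

print("drift   a0  TypeI  power   bias    MSE")
for drift in (-0.4, -0.2, 0.0, 0.2):
    for a0 in (0.0, 0.5, 1.0):
        type1, bias, mse = simulate_oc(0.0, drift, a0)
        power, _, _ = simulate_oc(0.5, drift, a0)
        print(f"{drift:5.1f} {a0:4.1f} {type1:6.3f} {power:6.3f} {bias:6.3f} {mse:6.4f}")
```

Without borrowing, Type I error sits near the nominal 2.5% whatever the drift. With full borrowing, the power gain at zero drift comes with clear inflation when historical controls look worse than the current ones. Showing this trade-off is the purpose of the simulation.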

When to Use External/Historical Controls

External or historical controls are most appropriate in rare or paediatric indications, in life-threatening diseases where concurrent randomisation is infeasible, and when effects are very large or objective, including some device or label-expansion contexts.

Planning should define comparability criteria, align endpoints and pre-specify analyses. Operating characteristics should be simulated under plausible heterogeneity and time trends, and sensitivity analyses should be planned, such as trimming, weighting, leave-one-out and robust-MAP mixtures.
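A leave-one-out analysis recomputes the pooled historical estimate with each study dropped in turn, showing whether one study drives the result. The sketch below pools hypothetical study-level placebo means with the DerSimonian-Laird random-effects estimator. Other heterogeneity estimators could be substituted.

```python
import math

def dersimonian_laird(estimates, ses):
    """Random-effects pooled mean (DerSimonian-Laird) with its standard error and tau^2."""
    w = [1 / s ** 2 for s in ses]
    sw = sum(w)
    fe = sum(wi * y for wi, y in zip(w, estimates)) / sw          # fixed-effect mean
    q = sum(wi * (y - fe) ** 2 for wi, y in zip(w, estimates))    # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(estimates) - 1)) / c) if len(estimates) > 1 else 0.0
    w_re = [1 / (s ** 2 + tau2) for s in ses]
    mean = sum(wi * y for wi, y in zip(w_re, estimates)) / sum(w_re)
    return mean, math.sqrt(1 / sum(w_re)), tau2

def leave_one_out(studies):
    """Re-pool the studies once per study, each time omitting that study."""
    results = []
    for i, (name, _, _) in enumerate(studies):
        rest = studies[:i] + studies[i + 1:]
        mean, se, tau2 = dersimonian_laird([y for _, y, _ in rest], [s for _, _, s in rest])
        results.append((name, mean, se, tau2))
    return results

studies = [  # hypothetical: (study, placebo mean change in SBP mmHg, standard error)
    ("S01", -1.2, 0.8), ("S02", -0.5, 0.6), ("S03", 0.3, 0.9), ("S04", -0.8, 0.7), ("S05", 3.5, 1.0),
]
mean, se, tau2 = dersimonian_laird([y for _, y, _ in studies], [s for _, _, s in studies])
print(f"All studies: {mean:.2f} (SE {se:.2f}), tau^2 = {tau2:.2f}")
for name, m, s, t2 in leave_one_out(studies):
    print(f"  without {name}: {m:.2f} (SE {s:.2f}), tau^2 = {t2:.2f}")
```

In this made-up example, dropping S05 removes the estimated heterogeneity altogether. That would prompt a closer look at how comparable S05 is to the current trial.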

Good practice is to document how external controls were selected, justify the assumed similarities, explain the differences and report both primary and sensitivity results transparently.

Operating Characteristics & Simulation Tips

Stress-test designs by varying between-trial heterogeneity and prior-current conflict, examine worst-case Type I error across the grid, and ensure acceptable power. For decision-making in early development, report how dynamic borrowing alters go/no-go probabilities relative to non-borrowing baselines.
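Go/no-go probabilities can be reported side by side for a no-borrowing baseline and a dynamic rule. The sketch below uses test-then-pool as a simple stand-in for dynamic borrowing: historical controls are pooled in full unless a z-test flags conflict. It assumes a normal outcome with known σ. The "go" rule is P(effect > 0 | data) > 0.8. The sample sizes, conflict level and drift grid are all hypothetical.

```python
import math
import random
from statistics import NormalDist

Z = NormalDist()

def prob_go(delta, drift, borrow, n_t=30, n_c=15, n_h=90, sigma=1.0,
            go_threshold=0.8, alpha_conflict=0.10, n_sims=10_000, seed=11):
    """Probability of a 'go' decision, i.e. P(effect > 0 | data) > go_threshold.

    borrow=False uses the current control arm only; borrow=True applies test-then-pool:
    historical controls (mean = true control mean + drift) are pooled in full unless a
    two-sided z-test of current vs historical control means has p <= alpha_conflict.
    """
    rng = random.Random(seed)
    ybar_h = drift                   # true current control mean is 0
    go = 0
    for _ in range(n_sims):
        ybar_t = rng.gauss(delta, sigma / math.sqrt(n_t))
        ybar_c = rng.gauss(0.0, sigma / math.sqrt(n_c))
        w_h = 0.0
        if borrow:
            z = (ybar_c - ybar_h) / (sigma * math.sqrt(1 / n_c + 1 / n_h))
            if 2 * (1 - Z.cdf(abs(z))) > alpha_conflict:
                w_h = float(n_h)
        mu_c_hat = (n_c * ybar_c + w_h * ybar_h) / (n_c + w_h)
        post_sd = sigma * math.sqrt(1 / n_t + 1 / (n_c + w_h))
        if Z.cdf((ybar_t - mu_c_hat) / post_sd) > go_threshold:
            go += 1
    return go / n_sims

drifts = (-0.5, -0.25, 0.0, 0.25)
for delta in (0.0, 0.4):
    for drift in drifts:
        base, dyn = prob_go(delta, drift, False), prob_go(delta, drift, True)
        print(f"effect {delta:.1f}, drift {drift:+.2f}: P(go) no borrowing {base:.3f}, test-then-pool {dyn:.3f}")
worst = max(prob_go(0.0, d, True) for d in drifts)
print(f"Worst-case P(go | no effect) with test-then-pool over the grid: {worst:.3f}")
```

Test-then-pool is easy to explain, but its all-or-nothing switch can give poor worst-case behaviour at moderate drift. Smoother rules such as robust-MAP or commensurate priors are typically compared against it on the same grid.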

Conclusion

This blog shows that historical placebo data can provide prior information for Bayesian analyses, place observed study data in a broader context, quantify covariate effects and establish reference ranges. In selected scenarios where randomisation is impractical or unethical, carefully chosen external controls can complement or, in rare cases, substitute for concurrent controls when supported by strong design, simulation and sensitivity analysis. Industry initiatives on historical-trial data sharing and clinical-data standards are reducing practical barriers and enabling safer, more efficient reuse of control-arm information.

Quanticate’s statistical consultancy team turns historical and external controls into decision-ready evidence, designing dynamic borrowing strategies, running simulations to protect Type I error, and delivering transparent analyses that meet global regulatory expectations.